Pentaho Jobs Extraction Prerequisites

This topic describes the prerequisites for extracting Pentaho jobs for assessment.

Introduction

LeapLogic Assessment profiles the existing inventory, identifies complexity and lineage, and provides comprehensive recommendations for migration to a modern data platform.

LeapLogic requires Pentaho jobs and transformations to be exported in ZIP format. There are two ways to export them: manually, one job at a time, through the Spoon UI, or in bulk through the command-line tool. The steps for both methods are given below.

Pentaho Spoon, the desktop client of Pentaho Data Integration (PDI), lets you manually export individual jobs/transformations from the “File” menu. To export a job/transformation using Spoon:

- Launch Spoon (Pentaho Data Integration).

- Open the job you want to export. If you don’t have the job created yet, you can create a new one by going to File -> New -> Job.

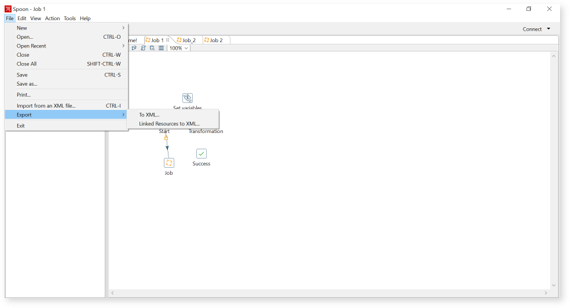

- Once you have the Job open, go to the “File” menu.

- Click Export, then select “Linked Resources to XML” from the drop-down menu.

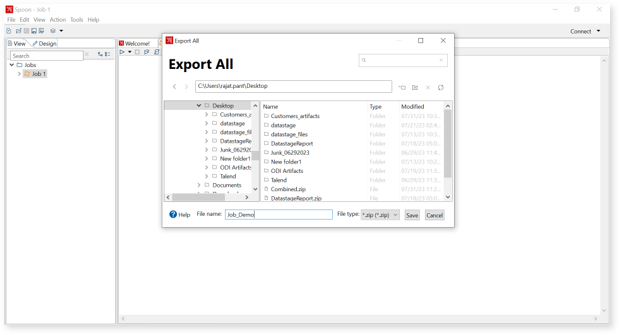

- Choose the location to save the job, enter the File Name, and set the File Type to a zip file.

- Click “Save” to export the Pentaho Job to the selected location.

The Pentaho job is now exported from Spoon in the required format. Note that this method exports one job at a time. To export multiple jobs, repeat the process for each job.
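
When many jobs are exported by hand, it can help to track which ones are still outstanding. The following is a minimal sketch, not part of LeapLogic or Pentaho: the function name `missing_exports` is hypothetical, and it assumes each archive was saved as `<JobName>.zip` in a single export directory.

```shell
#!/usr/bin/env bash
# missing_exports EXPORT_DIR JOB_NAME...
# Prints every job name that has no matching <JobName>.zip in EXPORT_DIR.
# Assumption: archives are named after the job; adjust if you use another scheme.
missing_exports() {
  local export_dir="$1" job
  shift
  for job in "$@"; do
    [ -f "$export_dir/$job.zip" ] || echo "$job"
  done
}
```

For example, `missing_exports /path/to/your/exported_jobs JobName1 JobName2` prints the names that still need to be exported from Spoon.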

Pentaho Bulk Job Extraction via Command Line

For bulk export of multiple jobs, consider using the Kitchen command-line tool with an export definition file (named export_jobs.ktr in this topic) to automate the process. Alternatively, the Pentaho User Console (PUC) provides a way to export multiple jobs from the repository in one go.

To perform a bulk export of Pentaho jobs and their dependent files (such as referenced transformations and sub-jobs) from the repository via the command line, you can use the Kitchen command-line tool along with a script or batch file:

- Create a text file that lists the jobs you want to export: In a text editor, create a file (e.g., export_jobs.txt) and enter the job names, one per line:

JobName1

JobName2

JobName3

…

Replace JobName1, JobName2, JobName3, etc., with the actual names of the jobs you want to export.
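
Hand-edited lists often pick up blank lines, stray spaces, Windows line endings, or duplicate names. As a rough sketch (the function name `clean_job_list` is hypothetical and not a Pentaho utility), the list can be normalized before it is used:

```shell
#!/usr/bin/env bash
# clean_job_list LIST_FILE
# Prints the job names from LIST_FILE with carriage returns removed,
# surrounding whitespace trimmed, blank lines dropped, and duplicates
# removed (the first occurrence of each name is kept, order preserved).
clean_job_list() {
  local list_file="$1"
  tr -d '\r' < "$list_file" \
    | sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' \
    | awk 'NF && !seen[$0]++'
}
```

A typical use would be `clean_job_list export_jobs.txt > export_jobs.clean.txt`, after which the cleaned file can be reviewed and used in place of the original.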

- Create a Kitchen job export script or batch file: Create a new text file (e.g., export_jobs.ktr for Windows or export_jobs.sh for Linux/Mac) in the same directory as the Kitchen command-line tool (usually located in the PDI installation directory).

For Windows, export_jobs.ktr is an XML file like the following:

    <?xml version="1.0" encoding="UTF-8"?>
    <job>
      <name>ExportJobs</name>
      <entries>
        <entry>
          <name>ExportJobs</name>
          <type>JobExport</type>
          <description/>
          <attributes>
            <attribute>
              <code>directory</code>
              <name>directory</name>
              <value>/path/to/your/exported_jobs/</value>
            </attribute>
            <attribute>
              <code>exportType</code>
              <name>exportType</name>
              <value>zip</value>
            </attribute>
            <attribute>
              <code>includeDependencies</code>
              <name>includeDependencies</name>
              <value>Y</value>
            </attribute>
          </attributes>
          <parameters>
            <parameter>
              <name>exportedJobsFile</name>
              <type>String</type>
              <default>export_jobs.txt</default>
              <description/>
            </parameter>
            <parameter>
              <name>exportAll</name>
              <type>String</type>
              <default>Y</default>
              <description/>
            </parameter>
          </parameters>
        </entry>
      </entries>
    </job>

For Linux/Mac (export_jobs.sh):

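    #!/bin/bash
    # Run the export definition with Kitchen, passing the export settings as parameters.
    # Linux/Mac option syntax is -option=value; /option:value is the Windows form.
    /path/to/your/kitchen.sh \
      -file="/path/to/your/export_jobs.ktr" \
      "-param:exportedJobsFile=/path/to/your/export_jobs.txt" \
      "-param:directory=/path/to/your/exported_jobs/" \
      "-param:exportType=zip" \
      "-param:includeDependencies=Y" \
      "-param:exportAll=Y"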
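
Most failures of a script like this come from a wrong path rather than from Kitchen itself. The sketch below checks the four paths used above before anything is run. The function name `preflight` is hypothetical, and the checks are only an assumption about what is worth verifying in your environment.

```shell
#!/usr/bin/env bash
# preflight KITCHEN JOB_FILE LIST_FILE OUT_DIR
# Returns 0 when every input looks usable and 1 otherwise.
# Each problem found is reported on stderr.
preflight() {
  local kitchen="$1" job_file="$2" list_file="$3" out_dir="$4" status=0
  [ -x "$kitchen" ] \
    || { echo "Kitchen launcher is not executable: $kitchen" >&2; status=1; }
  [ -r "$job_file" ] \
    || { echo "Export definition is not readable: $job_file" >&2; status=1; }
  [ -s "$list_file" ] \
    || { echo "Job list is missing or empty: $list_file" >&2; status=1; }
  { [ -d "$out_dir" ] && [ -w "$out_dir" ]; } \
    || { echo "Output directory is not writable: $out_dir" >&2; status=1; }
  return "$status"
}
```

Calling `preflight /path/to/your/kitchen.sh /path/to/your/export_jobs.ktr /path/to/your/export_jobs.txt /path/to/your/exported_jobs/ || exit 1` at the top of export_jobs.sh would stop the run early with a readable message.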

- Save the script or batch file.

- Execute the Kitchen script or batch file:

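For Windows: Open a Command Prompt in the directory containing export_jobs.ktr and run it with Kitchen, for example Kitchen.bat /file:export_jobs.ktr.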

For Linux/Mac: Open a terminal, navigate to the directory containing export_jobs.sh, and execute the script with bash export_jobs.sh.
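
Kitchen reports the outcome of a run through its exit status, which is easy to overlook in unattended runs. The helper below is a sketch that maps the status codes listed in the PDI Kitchen documentation to short messages. The function name `describe_kitchen_status` is hypothetical, and the code list should be confirmed against the documentation for your PDI version.

```shell
#!/usr/bin/env bash
# describe_kitchen_status STATUS
# Prints a short description of a Kitchen exit status.
# Assumption: the codes follow the published Kitchen status code table.
describe_kitchen_status() {
  case "$1" in
    0) echo "The job ran without a problem" ;;
    1) echo "Errors occurred during processing" ;;
    2) echo "An unexpected error occurred during loading or running of the job" ;;
    7) echo "The job could not be loaded from XML or the repository" ;;
    8) echo "Error loading steps or plugins" ;;
    9) echo "Command line usage printing" ;;
    *) echo "Unrecognized status: $1" ;;
  esac
}
```

On Linux/Mac it could be used as `bash export_jobs.sh; describe_kitchen_status "$?"`.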

Kitchen will read the export_jobs.txt file and export the listed jobs along with their dependent files (if any) from the repository to the specified directory (/path/to/your/exported_jobs/) in a ZIP archive.
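
Before handing the archive over for assessment, it is worth confirming that it actually contains job and transformation files. The sketch below counts `.kjb` and `.ktr` entries from a list of archive entry names read on standard input. The function name `count_pdi_entries` and the archive name in the usage note are hypothetical.

```shell
#!/usr/bin/env bash
# count_pdi_entries
# Reads archive entry names on stdin, one per line, and prints how many
# end in .kjb (jobs) and .ktr (transformations), ignoring letter case.
count_pdi_entries() {
  awk '
    tolower($0) ~ /\.kjb$/ { jobs++ }
    tolower($0) ~ /\.ktr$/ { trans++ }
    END { printf "jobs=%d transformations=%d\n", jobs, trans }
  '
}
```

With Info-ZIP installed, `unzip -Z1 /path/to/your/exported_jobs/exported_jobs.zip | count_pdi_entries` lists the entry names and summarizes them. A count of zero for both suggests the export did not pick up the expected objects.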

Additional Details

For additional details on Pentaho repo extraction, click here.