LeapFusion

LeapFusion is LeapLogic’s AI-driven feature designed to accelerate the modernization of legacy workloads. It automatically converts queries that the default LeapLogic Core transformation engine does not handle into optimized, target-native equivalents. It then validates the transformed queries using other LLMs, referred to as ‘Validator Models’, and suggests corrections where required. LeapFusion streamlines complex query transformation by reducing manual effort, improving accuracy, and accelerating the migration of legacy workloads to modern cloud platforms.

Key Features

LeapFusion delivers advanced, AI-driven capabilities to simplify and accelerate workload modernization, ensure consistency, and deliver highly accurate transformation outcomes. The key features of LeapFusion include:

Native integration with all Amazon Bedrock LLMs (referred to as Enterprise AI models)

LeapLogic provides seamless integration with Amazon Bedrock, including access to Claude and other foundation models. These are referred to as Enterprise AI models within the platform, as they run natively in the cloud and are continuously updated by their providers. This integration allows you to take advantage of cutting-edge generative AI models without additional configuration or setup.

By relying on these managed environments, you gain flexibility in choosing from multiple models while ensuring enterprise-grade scalability, availability, and security. The native support removes friction in adoption, making it easier to leverage generative AI for a wide range of transformation and modernization needs.

Plug-and-Evolve architecture (Auto-syncs with new LLM releases without manual updates or engineering effort)

The Plug-and-Evolve architecture is designed to automatically align with new LLM releases, ensuring that LeapLogic users always have access to the latest advancements without needing manual intervention. This capability eliminates the operational burden of constant engineering updates or patch management, allowing teams to focus on business outcomes instead of infrastructure upkeep.

This approach also future-proofs AI-driven modernization pipelines. As new models become available, the platform automatically incorporates them, ensuring compatibility, performance improvements, and access to innovative features without disrupting existing workflows.

Open-source LLMs adapted and trained on LeapLogic’s datasets and code formats (referred to as Open-source AI models)

Alongside the Amazon Bedrock integration, LeapLogic leverages open-source LLMs that are customized and trained on its own datasets and supported code formats. These are referred to as Open-source AI models, offering more control and flexibility in environments where cloud-based models are not feasible or preferred.

By aligning open-source LLMs with LeapLogic’s code and transformation patterns, the platform ensures consistent quality of outputs. This setup also helps organizations maintain compliance with specific data handling requirements while still benefiting from generative AI capabilities.

Dual-core and Hybrid AI Architecture

LeapLogic’s hybrid AI architecture combines deterministic pattern recognition (80–85%) with contextual generative reasoning (15–20%). This dual-core design ensures a balance between reliability and adaptability, producing outputs that are both accurate and context-aware.

The deterministic engine accelerates routine transformations with consistency, while the generative reasoning layer handles exceptions and nuanced scenarios. Together, they help compress project timelines, minimize manual intervention, and deliver more predictable modernization outcomes.
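
The division of labor described above can be illustrated with a minimal sketch. It is not LeapLogic's implementation: the rule table, the function names (`core_transform`, `hybrid_transform`), and the stub model are all hypothetical, and the two rules only expand the Teradata keyword abbreviations `SEL` and `DEL`. The sketch shows the routing idea only: try deterministic rules first, and hand the query to a generative model when no rule applies.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical deterministic rules: (compiled source pattern, target replacement).
RULES = [
    (re.compile(r"^SEL\b", re.IGNORECASE), "SELECT"),
    (re.compile(r"^DEL\b", re.IGNORECASE), "DELETE"),
]


def core_transform(query: str) -> Optional[str]:
    """Return the rule-based conversion, or None when no rule matches."""
    stripped = query.strip()
    for pattern, replacement in RULES:
        if pattern.search(stripped):
            return pattern.sub(replacement, stripped)
    return None


def hybrid_transform(
    query: str, llm_transform: Callable[[str], str]
) -> Tuple[str, str]:
    """Try the deterministic path first; fall back to the generative path."""
    converted = core_transform(query)
    if converted is not None:
        return converted, "core"
    return llm_transform(query), "llm"


def stub_llm(query: str) -> str:
    """Stand-in for a real model call (assumption: no network access here)."""
    return "/* converted by stub model */ " + query.strip()


print(hybrid_transform("SEL * FROM orders", stub_llm))
print(hybrid_transform("COLLECT STATISTICS ON orders", stub_llm))
```

Returning the path label (`"core"` or `"llm"`) alongside the result keeps a record of which engine produced each query, which is the kind of information an audit trail would need.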

Situation-aware and Semantic-Preserving Prompt Injection Engine

The platform features a prompt injection engine that is both situation-aware and semantic-preserving. This ensures that prompts are tailored to the context of the transformation task while retaining the original meaning of the code or logic being converted.

By carefully constructing prompts, the engine reduces ambiguity and enhances the precision of LLM responses. This results in higher-quality transformations and lowers the risk of unintended changes during code modernization.

Intelligent Cascading DQ Checks and Validations

LeapLogic applies intelligent cascading data quality (DQ) checks and validations across multiple LLMs in a single conversion pipeline. This layered approach ensures that outputs are validated at different stages, ultimately converging on the best possible result.

By distributing checks across multiple models, the platform maximizes reliability and minimizes error propagation. Customers benefit from cleaner, more trustworthy transformations that reduce the need for rework.
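
A cascade of this kind can be sketched as a loop in which each validator either accepts the query or returns a corrected version that is passed to the next validator. The code below is an illustrative assumption, not the platform's API: the `Validator` type, `cascade_validate`, and the two toy validators are hypothetical stand-ins for LLM-backed checks.

```python
from typing import Callable, List, Optional, Tuple

# A validator returns None when the query passes, or a corrected query otherwise.
Validator = Callable[[str], Optional[str]]


def cascade_validate(
    query: str, validators: List[Validator]
) -> Tuple[str, List[int]]:
    """Run validators in order, feeding each correction to the next one."""
    current = query
    corrected_by: List[int] = []  # indexes of validators that changed the query
    for index, validate in enumerate(validators):
        suggestion = validate(current)
        if suggestion is not None:
            current = suggestion
            corrected_by.append(index)
    return current, corrected_by


def fix_keyword_case(query: str) -> Optional[str]:
    """Toy check: the SELECT keyword must be upper case."""
    fixed = query.replace("select", "SELECT")
    return None if fixed == query else fixed


def require_semicolon(query: str) -> Optional[str]:
    """Toy check: the statement must end with a semicolon."""
    trimmed = query.rstrip()
    return None if trimmed.endswith(";") else trimmed + ";"


result, changed = cascade_validate(
    "select id from t", [fix_keyword_case, require_semicolon]
)
print(result, changed)
```

Because each validator sees the output of the previous one, an early correction cannot be silently undone without a later validator recording that it changed the query.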

Intelligent Recommendations for Conversational Interfaces

The system provides intelligent recommendations that extend to conversational interfaces, helping streamline analytics and BI workloads. These recommendations are designed to optimize query handling and resource utilization, which in turn lowers total cost of ownership (TCO).

By embedding AI-driven suggestions into conversations, LeapLogic helps users navigate complex workloads more efficiently. This improves both productivity and decision-making while keeping infrastructure and licensing costs in check.

Transparency and Auditability

LeapLogic provides transparency into, and auditability of, how AI transforms or rewrites code. Users can trace why a particular output was generated, which rules were applied, and how decisions were made during the process.

This visibility builds trust and enables teams to review, validate, or refine transformations with confidence. It also supports compliance with enterprise governance and audit requirements, making AI-driven modernization both explainable and verifiable.

Responsible AI Practices

Responsible AI is embedded into the platform’s design. LeapLogic applies fine-tuning only at the session level, ensuring that learning does not extend across customers or projects. This means no cross-customer sharing of data, knowledge, or transformations.

By isolating sessions in this way, the platform prioritizes privacy and confidentiality. Customers can rely on AI-powered transformations while knowing that their data and code remain fully secure and protected from external influence.

AI Models in LeapFusion

LeapFusion leverages AI-powered models to automate and optimize query conversion and validation. It employs the Transformer Model for intelligent query transformation and the Validator Model to ensure the accuracy of the transformed queries. By integrating both open-source LLMs and Enterprise AI models through platforms like Amazon Bedrock, it delivers scalability, flexibility, and consistent transformation outcomes.

- Transformer Model: Converts queries that are not handled by the default LeapLogic Core transformation engine to the target equivalent. It supports both Open-source AI models (LeapLogic) and Enterprise AI models (Amazon Bedrock) for query transformation.

  - Enterprise AI Models (native integration with Amazon Bedrock LLMs such as Claude and Nova): LeapLogic natively integrates with Amazon Bedrock, giving you access to leading foundation models like Claude, Nova, and others without additional setup. These managed environments ensure scalability, security, and flexibility, so you can easily leverage generative AI for modernization and transformation needs.

  - Open-source AI Models (open-source LLMs adapted and trained on LeapLogic’s datasets and code formats): LeapLogic leverages open-source LLMs, adapted and trained on its datasets and code formats to optimize transformation accuracy. These models offer flexibility, support compliance, and drive consistent modernization outcomes, while enabling organizations to uphold data-handling standards and harness generative AI capabilities.

- Validator Model: Validates the transformed queries and suggests corrections if needed.

The following table summarizes the AI models integrated within LeapFusion, outlining their roles and recommended use cases across ETL and EDW modernization.

| LeapFusion Models | AI Models | Description |
| --- | --- | --- |
| ETL (Open-source AI Models) | | |
| Energize | openorca | To convert small- to medium-sized ETL scripts. |
| Intercept | codellama-34b | To convert complex ETL scripts. |
| Embark | llama3.1:8b-instruct-q4_K_M | To convert small- to medium-sized ETL scripts. |
| Velocity | llama3.1:8b-instruct-fp16 | To convert small- to medium-sized ETL scripts. |
| EDW (Open-source AI Models) | | |
| Energize | openorca | To convert small- to medium-sized SQL queries. |
| Intercept | codellama-34b | To convert large-sized SQL queries and procedural code. |
| Embark | llama3.1:8b-instruct-q4_K_M | To convert small- to medium-sized SQL queries. |
| Velocity | llama3.1:8b-instruct-fp16 | To convert small- to medium-sized SQL queries. |
| EDW (Enterprise AI Models) | | |
| Claude Sonnet 4 | anthropic.claude-sonnet-4-20250514-v1:0 | For large-scale, multi-file, and complex conversions. |
| Claude 3.7 Sonnet | anthropic.claude-3-7-sonnet-20250219-v1:0 | For moderately complex query transformations and medium-sized modernization workloads. |
| Claude 3.5 Sonnet | anthropic.claude-3-5-sonnet-20240620-v1:0 | For smaller modules and simple transformations. |
| Nova Lite | amazon.nova-lite-v1:0 | For faster processing and multimodal use cases. |
| Llama 3 70B Instruct | meta.llama3-70b-instruct-v1:0 | For structured language and query translation, offering balanced performance for medium-sized workloads. |
| Titan Text G1 - Express | amazon.titan-text-express-v1 | For straightforward query or code conversions and automation. |
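
For scripting or reporting around a migration, the Enterprise AI rows of the table can be kept as a small lookup. The sketch below is only a convenience illustration: the model names and IDs are copied from the table, while the dictionary name `ENTERPRISE_MODELS` and the helper `bedrock_provider` are hypothetical and not part of LeapLogic.

```python
# Display name -> Amazon Bedrock model ID, copied from the table above.
ENTERPRISE_MODELS = {
    "Claude Sonnet 4": "anthropic.claude-sonnet-4-20250514-v1:0",
    "Claude 3.7 Sonnet": "anthropic.claude-3-7-sonnet-20250219-v1:0",
    "Claude 3.5 Sonnet": "anthropic.claude-3-5-sonnet-20240620-v1:0",
    "Nova Lite": "amazon.nova-lite-v1:0",
    "Llama 3 70B Instruct": "meta.llama3-70b-instruct-v1:0",
    "Titan Text G1 - Express": "amazon.titan-text-express-v1",
}


def bedrock_provider(model_name: str) -> str:
    """Return the provider prefix of a model ID (the text before the first dot)."""
    return ENTERPRISE_MODELS[model_name].split(".", 1)[0]


for name in ENTERPRISE_MODELS:
    print(f"{name}: {bedrock_provider(name)}")
```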

Workload Conversion Using LeapFusion

LeapLogic simplifies end-to-end transformation through its world-class Hybrid AI Modernization Engine. The Hybrid AI Modernization Engine combines the deterministic, pattern-driven LeapLogic Core engine with LeapFusion in a single conversion pipeline to deliver more accurate and optimized converted queries. By leveraging LeapFusion, its AI-driven intelligence layer, LeapLogic can automatically convert nearly 100% of legacy EDW workloads to modern cloud platforms, with the AI capability accounting for about 15% of the automation.

Source and Target Configuration

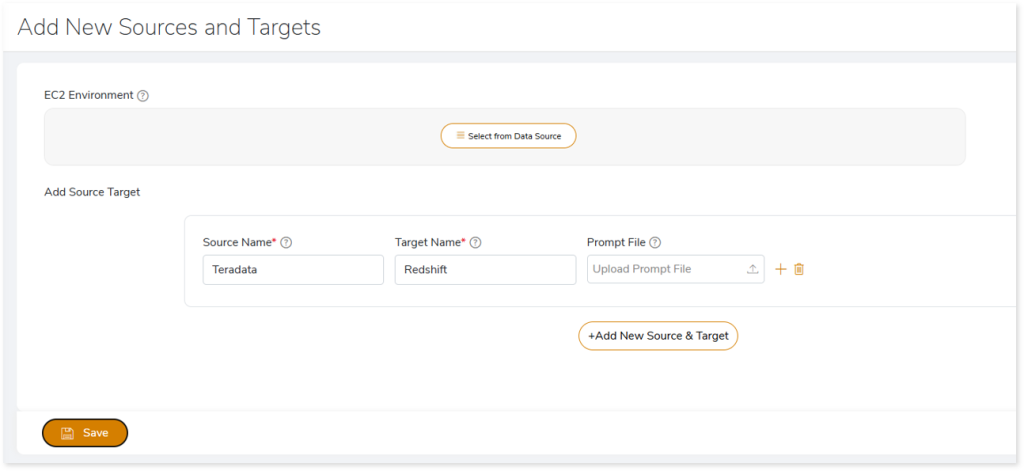

To enable workload conversion using LeapFusion, as a prerequisite, you need to configure the source and target on the Add New Sources and Targets page. For Open-source AI models in LeapLogic—which include Embark, Energize, Intercept, and Velocity—you also need to provide the EC2 instance connection details and a prompt file (.txt), in addition to the source and target configuration.

To add new sources and targets for LeapFusion, follow these steps:

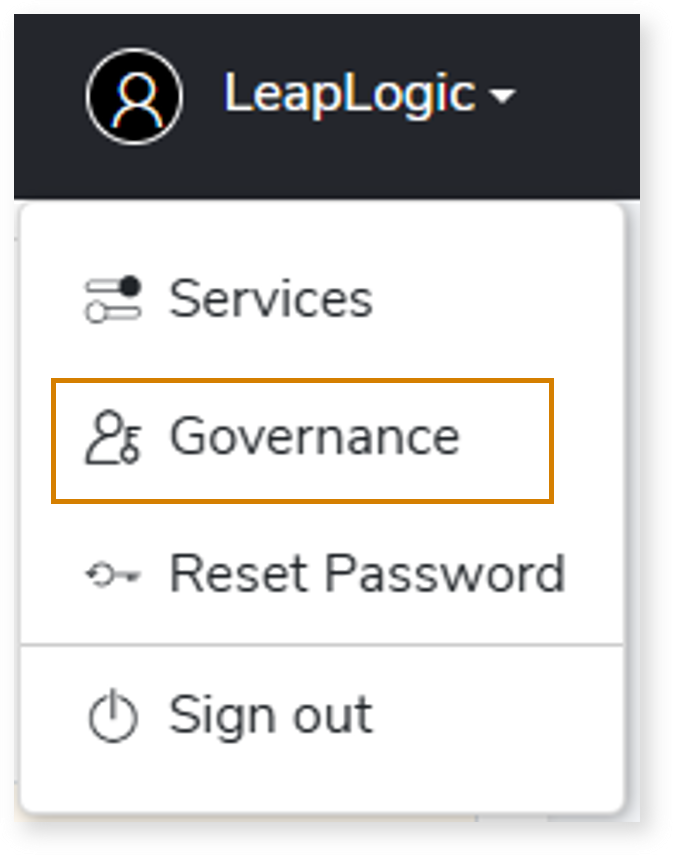

- Click your username at the top right corner of the screen.

- Click Governance from the menu.

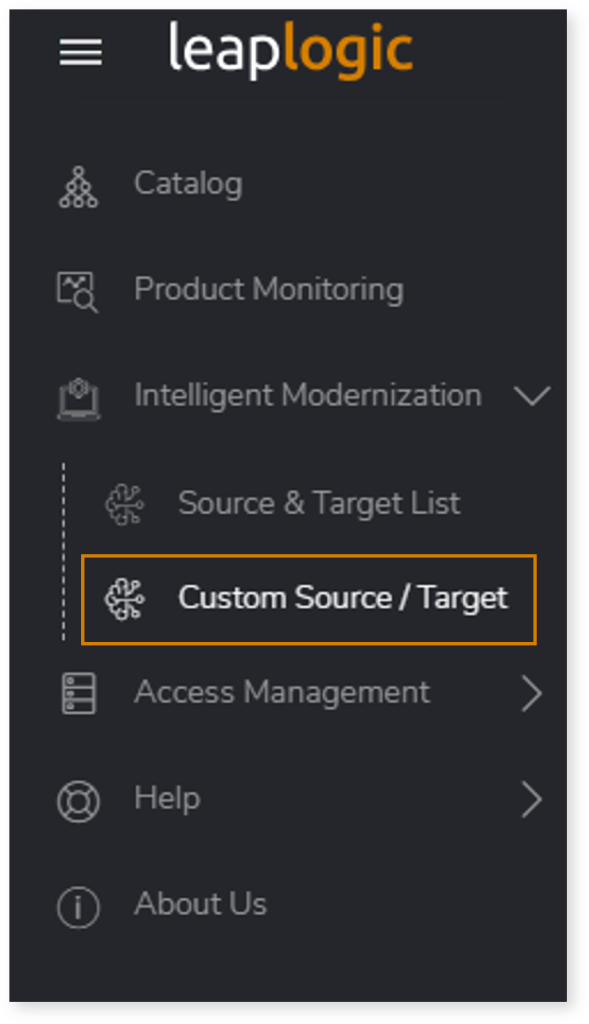

- Using the left navigation menu, choose Intelligent Modernization.

- Click the Custom Source / Target option.

- In EC2 Environment, select the data source that contains the connection details of the EC2 instance where you want to store the prompt file used to run the Open-source AI transformation models.

- In Source Name, specify any source from which workloads need to be transformed, irrespective of whether it is supported by LeapLogic.

- In Target Name, specify any target such as Databricks Lakehouse, Snowflake, etc., to which workloads need to be transformed, irrespective of whether it is supported by LeapLogic.

- In Prompt File, upload the prompt file which contains additional customized instructions for more context. It includes a detailed list of source patterns and their corresponding target patterns, which are essential for ensuring a seamless transformation via LeapFusion.

- Click Save.

After completing these configurations, you can transform your legacy EDW workloads to a modern cloud platform using LeapFusion.
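
The prompt file mentioned in the steps above is a plain `.txt` file of instructions and source-to-target patterns. Its exact layout is not specified here, so the following is only a sketch under assumptions: the function `build_prompt_text`, the wording of the instructions, and the `source => target` line format are all hypothetical, and the two sample patterns simply expand the Teradata keyword abbreviations `SEL` and `DEL`.

```python
from typing import List, Tuple


def build_prompt_text(
    source: str, target: str, patterns: List[Tuple[str, str]]
) -> str:
    """Assemble prompt-file text from a list of (source, target) pattern pairs."""
    lines = [
        f"Convert {source} code to {target}.",
        "Apply these source-to-target patterns:",
    ]
    for source_pattern, target_pattern in patterns:
        lines.append(f"- {source_pattern} => {target_pattern}")
    return "\n".join(lines) + "\n"


# Illustrative patterns only; a real prompt file would list many more.
sample = build_prompt_text(
    "Teradata",
    "Snowflake",
    [("SEL", "SELECT"), ("DEL", "DELETE")],
)
print(sample)
```

The returned string can be saved with any text editor or with `pathlib.Path("prompt.txt").write_text(sample, encoding="utf-8")` before uploading it in the Prompt File field.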

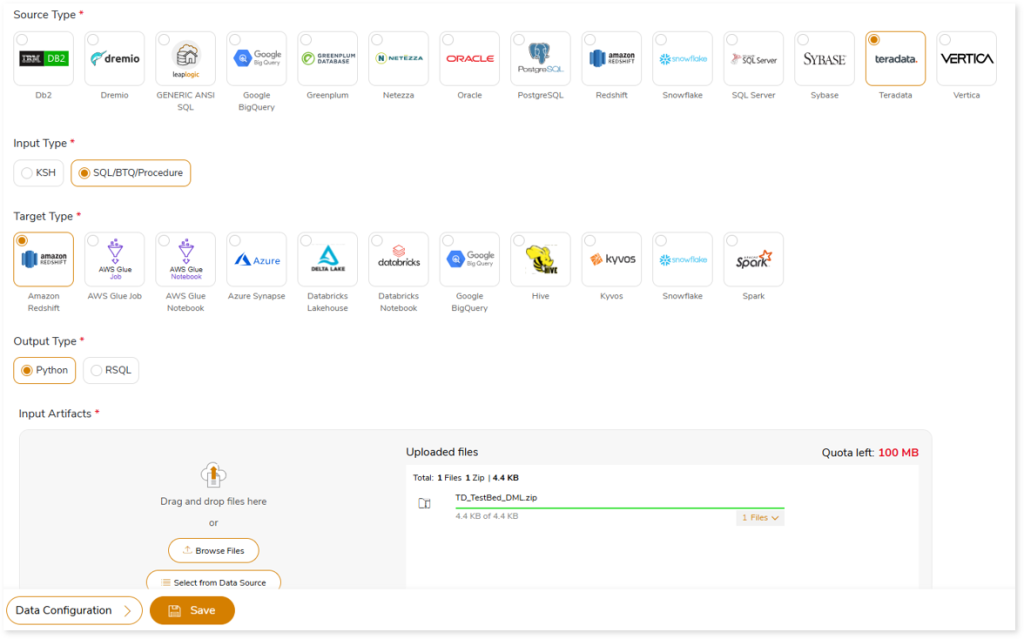

Steps to Convert Your Legacy Workloads Using LeapFusion

Follow these steps to convert your legacy workloads to the target cloud platform using LeapFusion.

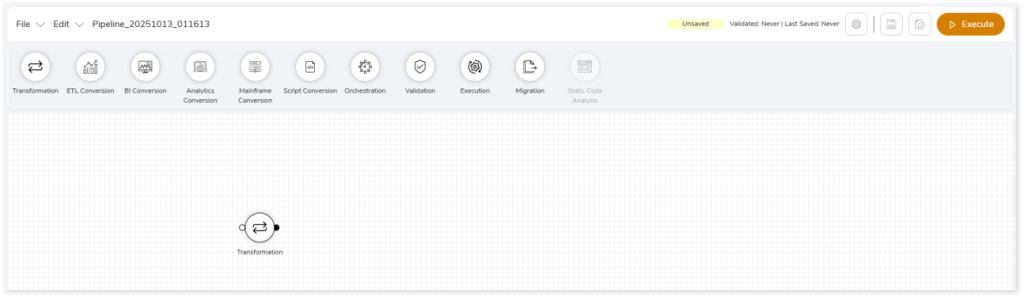

- Drag and drop the Transformation stage from the transformation library to the design page.

- Double-click the stage to open its configuration panel.

- Select the source type from which you need to transform your workloads.

- In Input Type, select the type of input script, such as SQL/BTQ/Procedure or KSH.

- Select the target type for the conversion.

- In Output Type, select the output type (such as Python, RSQL) to generate artifacts in that format.

- In Input Artifacts, upload the files that you need to transform to the target.

- Click Data Configuration.

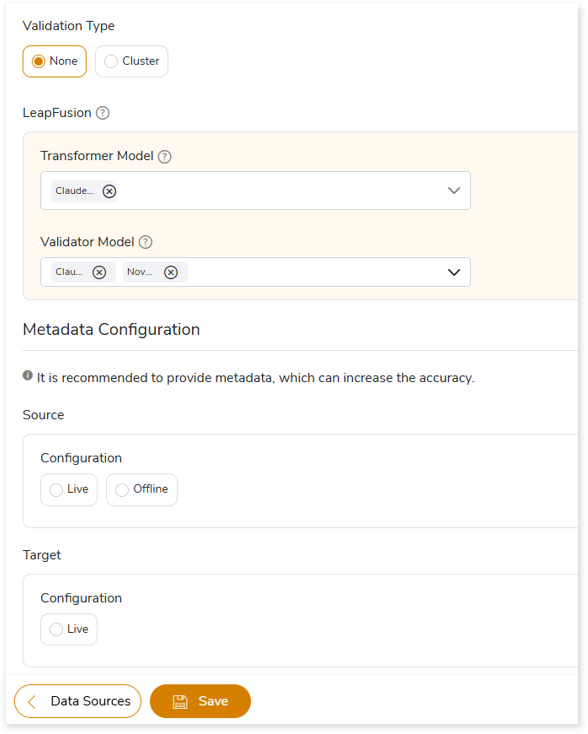

- In LeapFusion, select the preferred Transformer and Validator models to convert queries that require augmented transformation, and perform syntax validation for accuracy and optimal performance.

  - Transformer Model: Select the preferred Transformer model to convert queries that are not handled by the default LeapLogic Core transformation engine to the target equivalent. The Transformer Model supports both Open-source AI models (LeapLogic) and Enterprise AI models (Amazon Bedrock models such as Claude and Nova) for transformation.

  - Validator Model: Select one or more Validator models to perform syntax validation of the queries that are transformed by the LeapLogic Core engine and the Transformer Model. The Validator model validates the transformed queries and suggests corrections, if needed. When multiple Validator models are configured:

    - The first Validator model validates the queries transformed by the LeapLogic Core engine and the Transformer model. If any queries are identified as incorrectly transformed, it suggests updated queries.

    - The updated queries are then passed to the next Validator model, which validates them and suggests corrections, if required.

    - This process continues through all configured Validator models until the queries are successfully validated and the optimized queries are generated.

- Click Save to save the Transformation stage.

- Save and execute the pipeline.

- Go to the pipeline listing page and click your pipeline card to view the reports after successful execution.

To view the Transformation report, visit On-premises to Cloud Transformation Report.