Configuring On-premises to Cloud Transformation

In the Transformation stage, legacy code and business logic are transformed to the preferred target.

To configure the Transformation stage, follow the steps below:

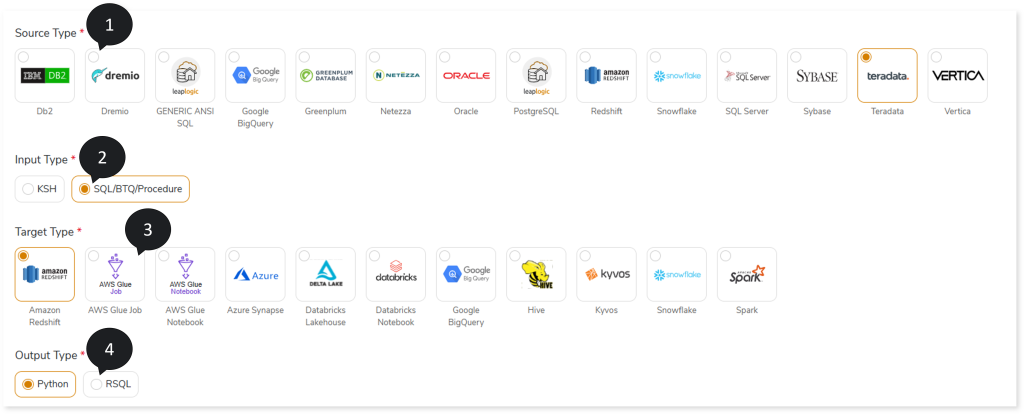

- In Source Type, select the required source data store, such as Netezza, Oracle, SQL Server, Teradata, Vertica, and more.

- In Input Type, select the type of input script, such as SQL/BTQ/Procedure or KSH.

- In Target Type, select the preferred target type, such as Spark, Snowflake, Amazon Redshift, and so on.

- In Output Type, select the format in which to generate artifacts, such as Python or RSQL.

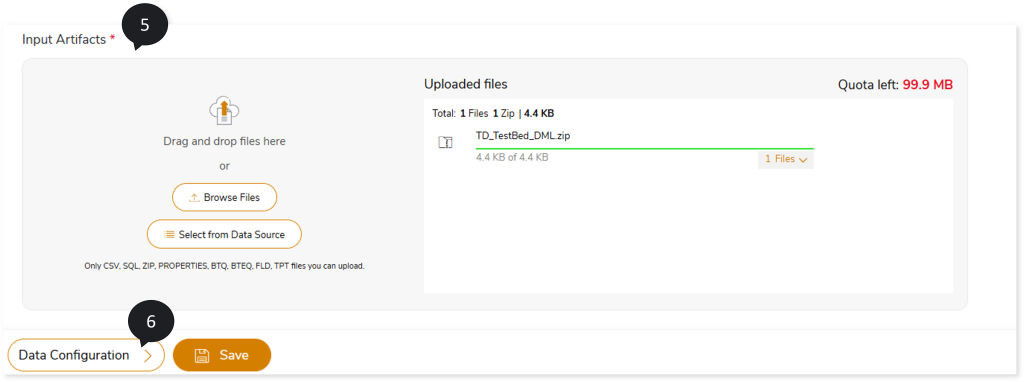

- In Input Artifacts, upload the files that you need to transform to the target.

- Click Data Configuration to configure the data.

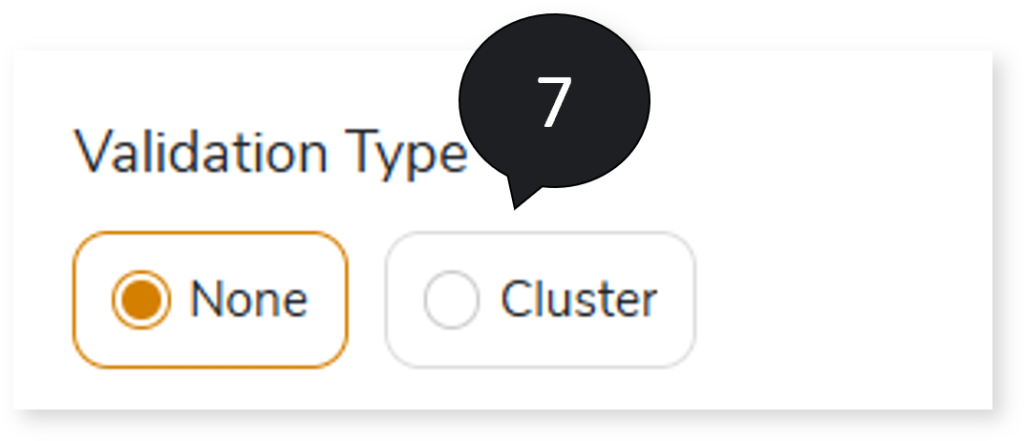

- In Validation Type, select the validation type:

  - None: Performs no validation.

  - Cluster: Syntax validation is performed on queries transformed by the LeapLogic Core transformation engine.

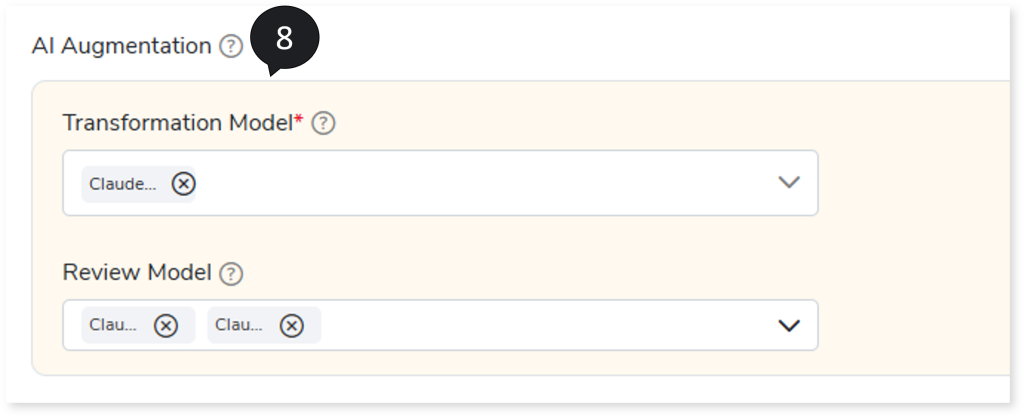

- In AI Augmentation, select the preferred Transformation and Review models. These models convert queries that require augmented transformation and perform syntax validation to improve accuracy and performance. To convert queries using AI Augmentation, you must first provide the source and target combinations on the Add New Sources and Targets page (Governance > Intelligence Modernization > Custom/ Source/ Target > Add New Sources and Targets).

- Transformation Model: Select the preferred Transformation model to convert queries that the default LeapLogic Core transformation engine does not handle into their target equivalents. The Transformation Model supports both Open-Source AI models (LeapLogic) and Enterprise AI models (Amazon Bedrock, such as Claude and Nova). To enable the Open-Source AI models (LeapLogic), such as Llama 8B, 4-bit quantized Model (Ollama), Llama 8B, 16-bit quantized Model (Ollama), Code Llama 34B, GPTQ 4-bit quantized Model (Hugging Face), and OpenOrca 7B, GPTQ 4-bit quantized Model (Hugging Face), you must first provide EC2 instance connection details and a prompt file (.txt) on the Add New Sources and Targets page, in addition to the source and target configuration (Governance > Intelligence Modernization > Custom/ Source/ Target > Add New Sources and Targets).

To view the detailed steps for providing EC2 instance connection details and the prompt file, click here.

The Open-Source AI models include:

- OpenOrca 7B, GPTQ 4-bit quantized Model (Hugging Face), Llama 8B, 16-bit quantized Model (Ollama), and Llama 8B, 4-bit quantized Model (Ollama): Suited to converting small- to medium-sized SQL queries.

- Code Llama 34B, GPTQ 4-bit quantized Model (Hugging Face): Suited to converting large SQL queries and procedural code.

- Review Model: Select one or more Review models to perform syntax validation on the queries transformed by the LeapLogic Core engine and the Transformation Model. A Review model validates the transformed queries and suggests corrections if needed. You can configure multiple Review models. When multiple Review models are configured:

  - The first Review model validates the queries transformed by the LeapLogic Core engine and the Transformation Model. If it identifies any incorrectly transformed queries, it suggests updated queries.

  - The updated queries are then passed to the next Review model, which validates them and suggests corrections, if required.

  - This process continues through all configured Review models until the queries are successfully validated and the optimized queries are generated.

This multi-stage validation is designed to improve accuracy, performance, and transformation efficiency.
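The sequential review described above behaves like a fold over an ordered list of reviewers: each Review model receives the output of the previous one. The following Python sketch only illustrates that data flow. It assumes each reviewer can be modeled as a plain function that takes a list of queries and returns a corrected list; `run_review_chain`, `fix_keyword`, and `ensure_semicolon` are hypothetical names and do not reflect LeapLogic's internal implementation or API.

```python
from typing import Callable, List

# Hypothetical model of a Review model: takes transformed queries and returns
# them with any corrections applied. This is NOT a LeapLogic API.
Reviewer = Callable[[List[str]], List[str]]


def run_review_chain(queries: List[str], reviewers: List[Reviewer]) -> List[str]:
    """Pass queries through each configured reviewer in order.

    Each reviewer receives the (possibly corrected) output of the previous
    reviewer; the last reviewer's output is the final result.
    """
    current = list(queries)
    for review in reviewers:
        current = review(current)
    return current


# Two toy "reviewers" used only for illustration.
def fix_keyword(queries: List[str]) -> List[str]:
    # Corrects a misspelled keyword left by an earlier stage.
    return [q.replace("SELCT", "SELECT") for q in queries]


def ensure_semicolon(queries: List[str]) -> List[str]:
    # Adds a terminating semicolon where one is missing.
    return [q if q.rstrip().endswith(";") else q.rstrip() + ";" for q in queries]


if __name__ == "__main__":
    print(run_review_chain(["SELCT id FROM t"], [fix_keyword, ensure_semicolon]))
    # prints ['SELECT id FROM t;']
```

Because each stage only sees the previous stage's output, the order in which Review models are configured can affect the final queries.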

To access this intelligent modernization feature (AI Augmentation), ensure that your account has the manager and llexpress_executor roles.

To view the detailed steps for assigning manager and llexpress_executor roles to your account, click here.

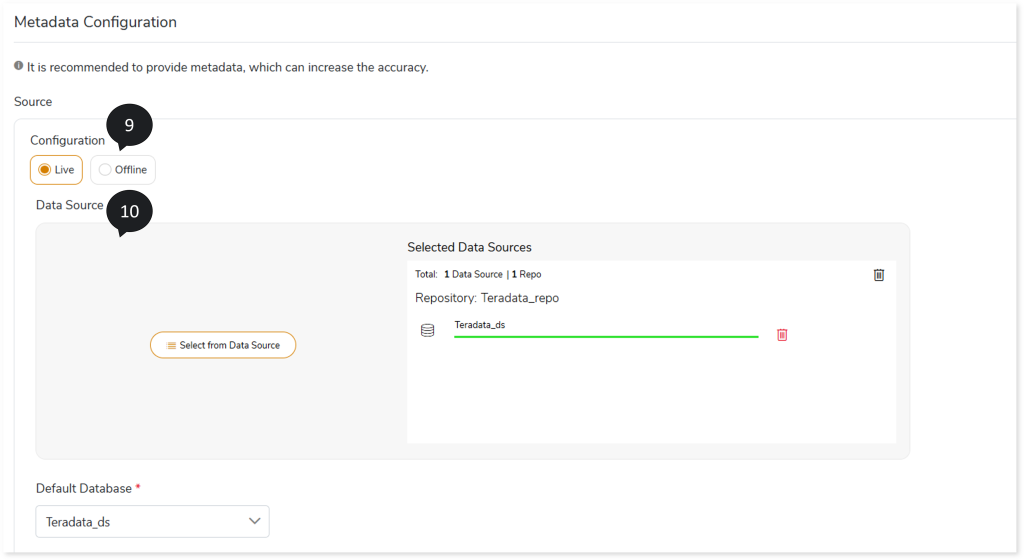

- In Source Configuration, select the source configuration as Live or Offline.

- If the selected Source Configuration is:

  - Live: Upload the data source and select the Default Database.

  - Offline: Upload the DDL files in .sql or .zip format.
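If your DDL is spread across many .sql files, you may find it convenient to bundle them into a single .zip archive before uploading them in Offline mode. The following Python sketch is a hypothetical helper, not part of LeapLogic: `bundle_ddl_files` and the `ddl` directory are illustrative names, and it assumes that an archive containing .sql files in subfolders is accepted, which you should confirm for your LeapLogic version.

```python
import zipfile
from pathlib import Path
from typing import List


def bundle_ddl_files(ddl_dir: str, zip_path: str) -> List[str]:
    """Hypothetical helper: collect every .sql file under ddl_dir into one .zip.

    Returns the archive member names (relative to ddl_dir) in sorted order.
    Raises ValueError if no .sql files are found.
    """
    root = Path(ddl_dir)
    sql_files = sorted(p for p in root.rglob("*.sql") if p.is_file())
    if not sql_files:
        raise ValueError(f"No .sql files found under {ddl_dir}")

    names: List[str] = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sql_files:
            # Store paths relative to ddl_dir so the folder layout is preserved.
            arcname = path.relative_to(root).as_posix()
            zf.write(path, arcname)
            names.append(arcname)
    return names


if __name__ == "__main__":
    # Assumes a local "ddl" folder containing your .sql DDL files.
    print(bundle_ddl_files("ddl", "ddl_bundle.zip"))
```

Non-.sql files in the folder are skipped, so the archive contains only DDL scripts.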

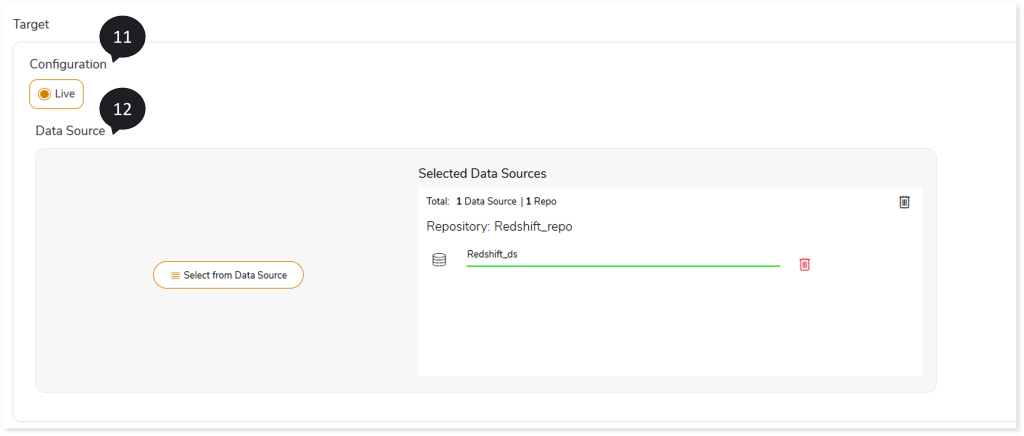

- In Target Configuration, select the target configuration as Live.

- Upload the target data source.

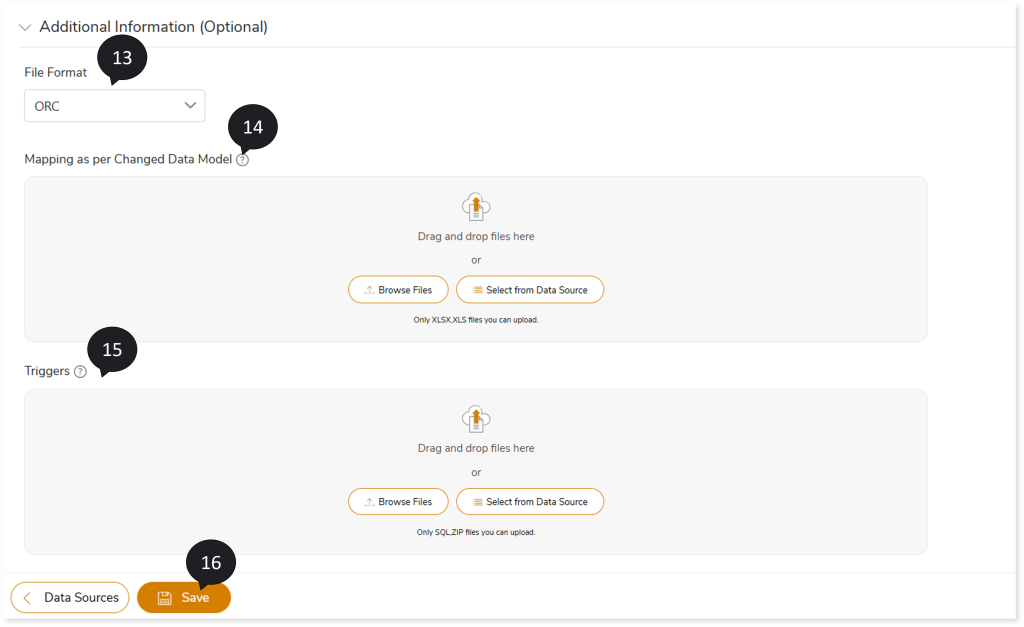

- In File Format, select the storage type: ORC, Parquet, Text File, or Avro.

- In Mapping as per Changed Data Model, upload the files that map source tables to target tables.

- In Triggers, upload the trigger statements for a more accurate conversion.

- Click Save to save the Transformation stage.

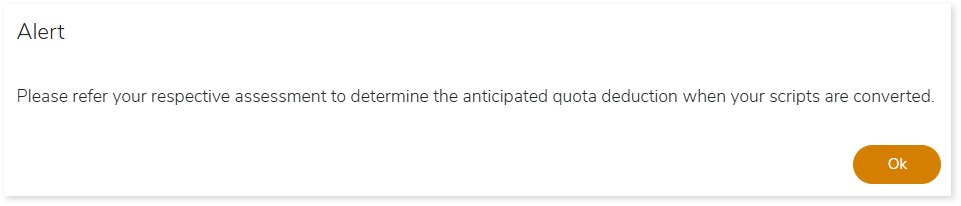

- An alert pop-up message appears, prompting you to refer to your assessment to determine the anticipated quota deduction for converting your scripts to the target. Click Ok.

- Click the Execute icon to execute the integrated or standalone pipeline. This opens the pipeline listing page, where your pipeline status shows as Running. The status changes to Success when the pipeline completes successfully.

- Click your pipeline card to view its reports.

To view the On-premises to Cloud Transformation report, see On-premises to Cloud Transformation Report.